A few days back I uploaded a Twitter video showing a little 303-ish sound simulator on the Commodore 64.

This came about because someone mentioned the 3rd of March as 303 day, and that got me remembering I had a simple player from a few years back that never had quite enough 'bite' to the sound. So I dug that out, played around with the source a bit and added some quick visuals, making sure it came in at 303 bytes or less as a tagline. (It's 301, btw.)

I've put the source to the version from the video at the end of this post. As you can guess this is code I wrote without doing much optimization as I only imagined it would be used in that video. :) But anyway, enjoy. Maybe down the line I'll do a much leaner version when I need to use it for something, or post the older version which at least autoruns.

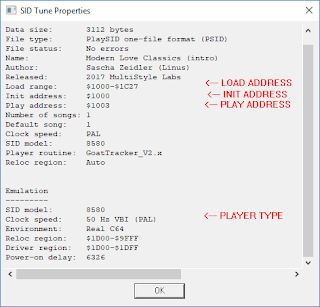

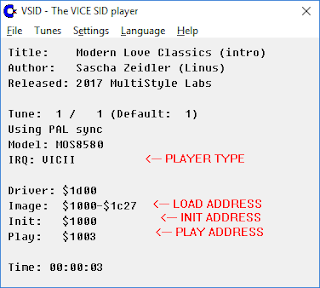

For anyone interested the video was recorded using the VICE emulator, with the 8580 SID and the reSID filter bias set to -500.

Background on the SID chip:

So how do we get a 303-like sound on the Commodore 64? Well, we have an advantage with this machine because of the unique SID chip, certainly very different to the AY, SN76489 and beepers of other hardware of the time.

It has 3 versatile oscillators (channels) that can produce triangle, saw, square and noise waveforms, plus some combinations of these. The oscillators can also have sync and ring modulation applied (the latter only on the triangle waveform), with the previous channel in the set providing the modulator input. This works in a round-robin fashion, so Channel 1 is affected by Channel 3, Channel 2 by Channel 1 and Channel 3 by Channel 2.
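As a rough sketch of that round-robin routing, here is one way to ring-modulate channel 1 with channel 3 as the modulator. The pitch, envelope and volume values are arbitrary picks for illustration and are not taken from the player, and the low frequency bytes are left at whatever they already hold.

```asm
; Sketch only: ring-modulate channel 1 using channel 3's oscillator.
        processor 6502
        org $c000

        lda #$0f
        sta $d418       ; master volume 15, filter modes off
        lda #$00
        sta $d405       ; channel 1 attack/decay = 0
        lda #$f0
        sta $d406       ; channel 1 sustain = 15, release = 0
        lda #$10
        sta $d401       ; channel 1 pitch, high byte (the note we hear)
        lda #$17
        sta $d40f       ; channel 3 pitch, high byte (the modulator)
        lda #$15        ; %00010101 = triangle + ring mod + gate
        sta $d404       ; channel 1 control register
        rts
```

Channel 3 doesn't need its gate set for this, since its oscillator keeps running either way. Using #$17 instead of #$15 would also switch on sync, which is the value the player itself writes during setup.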

Each oscillator has its own ADSR controls for instrument dynamics, and there's a global filter with low, band and high-pass modes that can be applied to any of the oscillator channels.

If this sounds like quite a powerful feature set for a home computer, you might be interested to know the chip's designer was Bob Yannes, who went on to co-found Ensoniq.

As well as these features there are a few more registers at the end of the list that can be used in your code. Firstly there are two Analog to Digital converter registers for the Paddle Controllers, and then there is a Random Number Generator. This uses the oscillator output from Channel 3 to provide an 8-bit number. To get what we think of as random numbers you set the third oscillator to noise, and use the pitch of that channel to control the speed of changes to the random value. You can also use this as a low-quality SID sampler, by playing an instrument on channel 3 and then recording the values that come in to memory. With a bit of manipulation you can play them back through the 4-bit volume register. I did this in the Type Mismatch demo.
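A minimal sketch of the random number setup described above, assuming channel 3 is free to use. Writing the values to the border colour is only there so there's something to see, and isn't part of the player.

```asm
; Sketch only: use channel 3's noise output as a random number source.
        processor 6502
        org $c000

        lda #$ff
        sta $d40e       ; channel 3 pitch, low byte
        sta $d40f       ; channel 3 pitch, high byte (highest = fastest changes)
        lda #$80        ; noise waveform selected, gate off
        sta $d412       ; channel 3 control register
getrand
        lda $d41b       ; read channel 3's current oscillator output
        sta $d020       ; example use: put it in the border colour
        jmp getrand
```

Lowering the pitch written to $d40e/$d40f slows down how often the value at $d41b changes.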

How the player works:

To update the player independently of the main loop we point the hardware interrupt vector ($0314-$0315) at our music player start point. This gives us a regular update from the system timer (roughly 60 times a second by default) so we have a steady tempo. As we're using the interrupt in freewheeling mode rather than hitting a specific raster line, it will be triggered wherever the raster beam happens to be when the interrupt is called. This usually isn't a good idea for things you want to happen at a specific screen position, however for us this is actually useful later in the player as we'll use the raster beam position to pick values.
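Stripped of everything else, hooking the interrupt looks something like this. The label player and the border flash are stand-ins for illustration; the vector address and the $ea31 exit are the same ones the full source uses.

```asm
; Sketch only: point the interrupt vector at our own routine.
        processor 6502
        org $c000

        sei             ; no interrupts while the vector is half-written
        lda #<player
        sta $0314       ; low byte of the new handler address
        lda #>player
        sta $0315       ; high byte of the new handler address
        cli
        rts             ; back to BASIC, the handler keeps running

player
        inc $d020       ; stand-in for the music routine
        jmp $ea31       ; carry on into the normal Kernal interrupt routine
```

Start it with sys 49152 and the border should keep changing colour while BASIC stays usable.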

The player doesn't have a traditional song/pattern setup, only a single pattern with some hard-coded changes that tweak it a little bit over time.

Every pass a counter (located at $60) counts down until it rolls around to #$ff; when this happens it's time to move on to the next note in the pattern. If this hasn't happened the player jumps to the update portion, which handles things that need to happen continually, such as applying the filter sweep or checking if the drums have reached their note-off time.

If the counter is #$ff it's reset to the song's tempo (#$06) and the next note is set up. The filter cut-off value is also reset, and the player checks if a drum sound needs to play at this new position.

A pattern is 16 notes long. When all notes have been played the player checks if the number of cycles for that pattern has been reached. If not, the cycle count is decreased and we continue. If we've reached the end of the cycle a few things are changed:
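The countdown on its own can be sketched like this. The name tick and the border flash are illustrative stand-ins; the address $60 and the reset value #$06 are the ones described above.

```asm
; Sketch only: tempo countdown inside an interrupt handler.
        processor 6502
        org $c000

tick    equ $60         ; same zero page location the post uses

        sei
        lda #$06
        sta tick        ; start with a full count
        lda #<player
        sta $0314
        lda #>player
        sta $0315
        cli
        rts

player
        dec tick        ; one step closer to the next note
        bpl update      ; still 0 or above: no new note this time
        lda #$06
        sta tick        ; rolled round to #$ff: reset to the tempo value
        inc $d020       ; stand-in for setting up the next note
update
                        ; per-interrupt work (filter sweep, drum note-off) would go here
        jmp $ea31       ; hand over to the normal Kernal interrupt routine
```

Because the count runs 6, 5, 4, 3, 2, 1, 0 and then rolls to #$ff, the new-note branch is taken on every 7th interrupt.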

* The waveform used for the main melody. (a selection between saw, pulse and triangle)

* The length of the next pattern cycle from a table.

* The melody and ring-mod source notes.

* The drum check value, to skip some types of drum to add some dynamics to the 'song'.

Some of these changes use 'naughty' self-modifying code to directly alter the code as it's running (so it changes from what was assembled into something else). For small-form stuff like this it can really save you some space, though obviously resetting to the initial state needs additional code.
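For anyone who hasn't seen self-modifying code before, here's a small sketch of the idea using the same trick the player applies to its note reads. The labels readnote and nextpage are made up for this example.

```asm
; Sketch only: rewrite an instruction's operand while the program runs.
        processor 6502
        org $c000

        ldy #$00
        jsr readnote    ; first call reads from $f704
        jsr nextpage    ; rewrite the operand in place
        jsr readnote    ; second call reads from $f804
        rts

readnote
        lda $f704,y     ; assembles to $b9 $04 $f7: opcode, low byte, high byte
        sta $d401       ; channel 1 pitch, high byte
        rts

nextpage
        inc readnote+$02 ; bump the stored high byte, so $f7 becomes $f8
        rts
```

A single 3-byte inc replaces what would otherwise need a pointer in zero page plus indirect addressing, which is where the space saving comes from.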

Melody

The music data comes from the Kernal and BASIC ROMs in the machine. This is essentially 'free' data as the Kernal, BASIC and character gfx ROMs are in memory when you switch the machine on. As we leave the machine in this state we're free to use them. This data can potentially be any value between 0-255, but as we're looking only for low bass notes we do some BIT math to get something useable.

The C64 oscillators have a full 16-bit range so can be very accurate indeed, however we are only using the high-byte register as the slightly discordant sound seems to suit these sort of acid lines.

The main melody uses some of the Kernal, I picked $f704 as it had a good string of melodies. (The Kernal is at $e000-$ffff by default in memory.)

lda $f704,y ; Read byte from Kernal area.

and #$0f ; Remove the top 4 bits of the value so we get a note between 0 and 15.

eor #$03 ; Mess around with the bottom two bits, this is more personal taste for the data I'd chosen.

sta 54273 ; store in channel 1's high frequency value register.

And the ring/sync modulation channel reads from the BASIC ROM. I picked $a340 as a starting point there. (BASIC is at $a000-$bfff.) This also removes BITs from the data but leaves a range of 0-63, which means you occasionally get harmonics above the bass note that add a bit of flavour to the sound.
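For comparison with the melody read, the equivalent lines for the modulation channel look like this. The three middle instructions are taken from the source at the end of the post; the processor, org, ldy and rts lines are only wrapped around them so the fragment assembles on its own.

```asm
; Sketch only: read one modulation note for the current pattern position.
        processor 6502
        org $c000

        ldy $61         ; current pattern position, as in the full source
        lda $a340,y     ; read byte from BASIC ROM area
        and #$3f        ; keep the low 6 bits, giving a value between 0 and 63
        sta 54273+7     ; store in channel 2's high frequency value register
        rts
```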

At the end of a pattern cycle the upper byte of the songdata reads are increased by one, so $f704 becomes $f804 and $a340 becomes $a440 to give a new set of notes. Because this was for a video I don't do any checking for wrapping round to the start of memory, so eventually you'll end up just with the same low note for a while as it cycles through (what is probably) empty bytes.

The earlier driver resets the machine after a few cycles so never reaches a blank space in memory.
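If you did want to keep the reads inside the ROMs rather than resetting, one possible approach is sketched below. This is not what either version of the player does, the labels nextpattern, melodyok and modok are made up, and the extra bytes would need to be found elsewhere to stay under 303.

```asm
; Sketch only: keep both self-modified read addresses inside their ROMs.
        processor 6502
        org $c000

nextpattern
        inc chan1+$02   ; melody source: move to the next page of the Kernal
        bne melodyok    ; did the high byte wrap from $ff to $00?
        lda #$e0
        sta chan1+$02   ; if so restart at page $e0, the start of the Kernal
melodyok
        inc chan2+$02   ; modulator source: move to the next page of BASIC
        lda chan2+$02
        cmp #$c0        ; stepped past the end of BASIC at $bfff?
        bne modok
        lda #$a0
        sta chan2+$02   ; if so restart at page $a0, the start of BASIC
modok
        rts

chan1
        lda $f704,y     ; same melody read as the player
        rts
chan2
        lda $a340,y     ; same modulator read as the player
        rts
```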

You could also use the program itself as source data but as you continue coding the melodies will keep changing. Best to leave that bit until the end.

Drums

The drum setup isn't particularly efficient and I suspect I was going to work on it some more in the old player at some point. Anyway, it cycles through a loop of BIT values looking for a match in the drum pattern data. These being:

* $40 - Bass Drum

* $08 - Snare

* $02 - Hi-hat

* $01 - Rest (or indeed anything else, again I think this was going to be extended originally)

If it finds a match, the relevant values in a few small tables are used to set the Waveform, Starting Pitch, Pitch Sweep (signed) and the number of frames to wait before the note-off is played. This doesn't use the modern way of producing drums by cycling through an instrument table to set the waveform and pitch values per frame. That is the setup I used in In A Loop a few years back.

The pattern data is stored with the demo. It could use ROM data but finding a coherent beat is actually a lot more difficult than you'd imagine compared to looping melodies. I could write a program to check for exact matches in the ROM I guess. I've done something similar trying to cram all of State of the Art's visuals into a 4kb demo.

Changes between the old player and new one:

As I mentioned I didn't think the old player had enough 'bite' to the sound, there's a sort of aggressive overtone to a 303 that is difficult to do with just one channel of the SID.

Originally I was using the second channel as a fake delay for the melody. This was done by reading 3 steps behind the main melody's pattern, with the second channel playing at a lower volume than the first. I switched this over to a triangle waveform with sync and ring-mod enabled, which follows its own note sequence. This gives some extra harmonics to the sound when above the melody pitch, but also affects the timbre of the main note when below it.

The other thing I did was change how the filter sweep works. In the original player the sweep starts at one point and drops down the same amount for every note. I made two changes. Firstly, the sweep speed has a different value per note, read from the ROM at a position decided by the raster beam. So it's quite varied and will actually be different every time the driver is run. Secondly, every cycle through the pattern the filter start value is reset to a variable value between 0 and 127, again using a similar setup to the sweep.

For playback I went for the 8580 version of the SID above the older 6581. The 6581 has a much 'dirtier' sound with filter enabled because the filter adds some distortion to the affected channels, but I found a nice range with the 8580 filter that I preferred this time.

Things that could be added:

Apart from a general refactor and optimization pass (starting with that init code) some possible ideas are:

* Adding Accent and Pitch slide functions to really give that 'acid line' sound.

* Change drums to use instrument tables for more punchy control.

* Autostart when loading.

The old player has a proper BASIC header as it was still way under 303 bytes. But there's also a workaround that skips a BASIC header and uses only 4 bytes, but means your code starts just before the tape buffer area. The only problem with this one is it's right next to the default screen location, so you either have to move your screen or hide it. I used this in mus1k by setting the screen colour to the same as the text. :)

On the subject, if you have really small code (in the 20 bytes range) you can set the start address to $7c and have it autostart for you with no header at all. I used this in Glitchshifter.

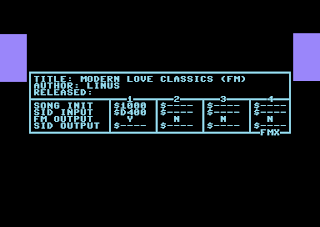

303 source for C64:

; 303 style by 4mat 2020

; Type sys 49152 to play.

; Written using Dasm assembler. Should be mostly compatible with other assemblers except:

; + The processor line is probably Dasm only unless your assembler handles multiple processors.

; + org might be replaced by * or something else.

; + Some assemblers use !byte or something else instead of .byte, see your docs.

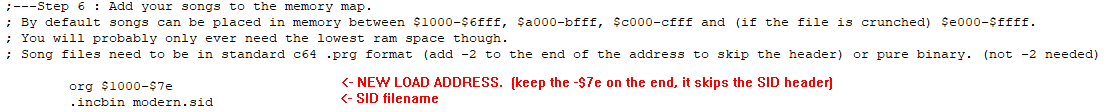

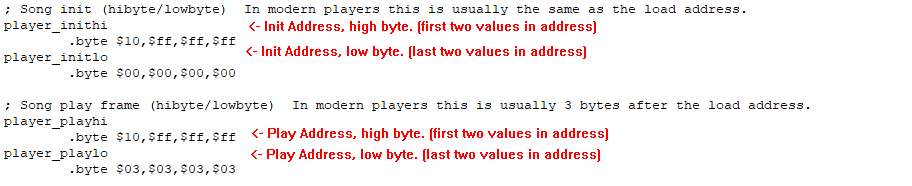

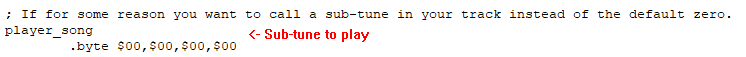

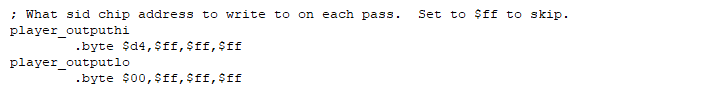

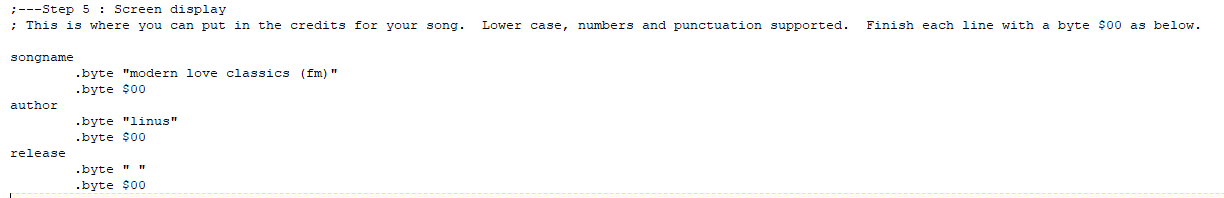

; Memory map at bottom of source.

; Set start address to $c000. (49152)

org $c000

processor 6502

; Init Intro.

; Disable interrupts while we do our setup.

sei

; Copy song data to zero page.

; This saves a bit of memory as some assembler commands will only use 2 bytes instead

; of 3 if they were in 'normal' memory.

ldy #$00

ldx #$30

argha

tya

sta $60,x

lda datas-1,x

sta $0f,x

dex

bne argha

; Setup soundchip.

; By default I've set all channels to use triangle and sync/ring-mod for the first cycle.

setsound

lda #$17

sta 54276,y

lda #$08

sta 54275,y

lda #$00

sta 54277,y

lda $1d,x

sta 54278,y

lda $10,x

sta $6b,x

lda $12,x

sta 54295,x

inx

clc

tya

adc #$07

tay

cpy #$15

bne setsound

; Point hardware interrupt at the music player section.

lda #<musicloop

sta $0314

lda #>musicloop

sta $0315

; Enable interrupts so we're good to start.

cli

; Main loop.

; Only the visuals are updated here. This part runs as fast as the spare CPU

; time will allow.

loop

; Take the current filter cut-off value and add 65 to it (so it's in the Petscii area

; of the character set)

lda $62

adc #$41

; Use the random number generator to read the oscillator value from channel 3 and use

; this as the X offset position. Because channel 3 is where the drums are, for snares

; and hihats this will be using the noise waveform.

ldx $d41b

; Store the petscii char into the screen area. This is repeated 4 times to fill the 4

; pages of the screen. I used a slight offset from the usual $0400 start position to

; move the visuals around a bit.

sta $03e8,x

sta $04e8,x

sta $05e8,x

sta $06e8,x

jmp loop

; Music player.

musicloop

; Decrease tempo tick, if it goes minus (#$ff) we need a new note, otherwise we can jump

; to the instrument update part.

dec $60

bmi musicloop2

bpl updatedrums

; Get new note in the pattern.

musicloop2

; Reset tempo tick to full. (in our case #$06 which is about 125 bpm)

lda #$06

sta $60

; Make new value to add to filter cut-off for this note.

; Using the current raster beam value ($d012) as an offset, read a value from the

; Kernal ROM and use only the lower 4-bit value. Then add the current number of

; loop cycles ($6b) to make each value slightly different.

ldy $d012

lda $e144,y

and #$0f

adc $6b

sta filtsweep+$01

; Get new note values, the current pattern position stored in $61.

ldy $61

; First the main melody, using the lower 4-bits from some of the Kernal ROM to only

; use bass values between 0 (silence) and 15. The extra 'eor #$03' is more for

; personal taste with the different melodies.

chan1

lda $f704,y

and #$0f

eor #$03

sta 54273

; Now the sync/ring-mod channel, using data from the BASIC ROM, but only taking

; the low 6-bits.

chan2

lda $a340,y

and #$3f

sta 54273+7

; Set new Drum

; This checks against 4 possible BIT values to see if a drum needs to be played.

; ($40 = Bass Drum, $08 = Snare, $02 = Hihat, $01 = Rest)

noresetsq

ldx #$00

drumcheck

; Get next position value from drum rhythm table.

lda $30,y

; Check if value matches the current BIT value indexed.

and $20,x

beq nobit

; If it does this means we have a new drum to play.

; Firstly set the waveform from that table. Also store it at $66 so we can apply

; note off later.

lda $24,x

sta 54276+14

sta $66

; Set the drum pitch, this is placed directly in a variable as we do work on this

; value when adding the pitch sweep.

lda $27,x

sta $67

; Set the drum pitch sweep.

lda $2a,x

sta $68

; Finally set the timer value before applying the note-off on the drum.

lda $2d,x

sta $69

nobit

dex

bpl drumcheck

; Increase the pattern position by 1 and AND the value by #$0f so it's always

; between 00-15.

iny

tya

and #$0f

sta $61

; Check if the value is 00, if not we don't need to decrease the amount of pattern

; cycles yet.

bne noupdate

; Decrease the amount of pattern cycles and check if this is #$ff yet. If not we

; don't need to create a new pattern yet.

dec $6b

bpl noupdate

; When the pattern cycles are complete it's time to create a new pattern.

; Firstly we do some self-modifying code to the initial value of the drum check loop.

; This means we don't always get the same drum beat by dropping out checks for the

; snare and hi-hats.

dec noresetsq+$01

lda noresetsq+$01

and #$03

sta noresetsq+$01

; We also use this value to change the melody line's waveform, from the table at $15-$18.

tax

lda $15,x

sta 54276

; We also change the amount of pattern cycles for the next pattern from the

; table at $19-$1c.

lda $19,x

sta $6b

; Now we do some more self-modifying code to change the memory position to read the

; note data from. This increases the high memory value by one for the main melody and

; the ring-mod channel. This does mean that eventually both values will reach past the

; end of memory and reset back to $0000. As mentioned in the docs, as this was for a video

; I didn't add any checking for this occurrence, however the older version of the player resets

; the machine to avoid this happening.

inc chan1+$02

inc chan2+$02

; This resets the starting value of the filter cut-off value. It works very similar to

; the filter sweep setup though we start with a 7-bit value, halve it and then add

; the current pattern position value to it.

noupdate

ldy $d012

adc $e948,y

and #$7f

lsr

adc $61

sta $62

; This is where the player falls through to on every frame. This updates the filter and

; drums and then sends an acknowledgement to the timer system that this routine has finished.

; Check the tempo tick against the current drum's note-off value. If it's the same switch off

; the waveform's gate (bit 0, the lowest bit) so the release part of the ADSR gets activated.

updatedrums

lda $60

cmp $69

bne nodrumgate

dec $66

lda $66

sta 54276+14

nodrumgate

; Add the drum's pitch sweep value to the current pitch and store it in channel 3's high pitch

; register. Note that we don't do the math directly on the register because the SID's pitch

; registers are write only.

clc

lda $67

adc $68

sta $67

sta 54273+14

; Set current filter cut-off value to the cut-off register.

lda $62

sta 54294

; Decrease the cut-off value by the current filter sweep value. (note this was set in

; self-modifying code earlier) If the value is already below zero don't store it in the

; variable so it only stays at this value.

filtsweep

sbc #$00

bmi filtnot

sta $62

; End of music driver, call to IO system that we've ended our routine.

; As we have the full default system enabled we need to use $ea31 rather than the

; less cpu-heavy $ea81

filtnot

jmp $ea31

datas

.byte $05,$07

siddata

.byte $f3,$1f,$00

basswaves

.byte $11,$21,$41,$21

length

.byte $01,$03,$03,$03

vols

.byte $a9,$3c,$79

btt

.byte $40,$02,$08,$00

wav

.byte $41,$81,$81

not

.byte $0a,$ff,$20

plu

.byte $ff,$fe,$fc

del

.byte $03,$02,$01

beat

.byte $40,$01,$02,$01,$08,$01,$02,$01,$40,$01,$02,$01,$08,$01,$02,$40

; Memory map:

; $10 = Initial pattern cycles value for first pattern. (datas)

; $12 = SID Filter values for filter type/volume and resonance/channel allocation. (siddata)

; $15 = Melody line waveform table. (basswaves)

; $19 = Melody loop cycle length table. (length)

; $1d = SID Channel sustain and release values. (Attack/Decay are always zero) (vols)

; $20 = Drum BIT value check table. (btt)

; $24 = Drum Waveform table. (silence isn't stored in drum values) (wav)

; $27 = Drum starting Pitch value table. (not)

; $2a = Drum Pitch addition signed value table. (plu)

; $2d = Drum ticks before note off value table. (del)

; $30 = Drum beat pattern table. (beat)

; $60 = Current note timing tick.

; $61 = Current note position in pattern.

; $62 = Filter cut-off value.

; $66 = Drum Waveform setting for use with note-off.

; $67 = Current drum pitch.

; $68 = Pitch value to add to drum pitch every frame. (signed value)

; $69 = Drum note-off timer.

; $6b = number of cycles to loop the current pattern